Make Google Calendar Your Best Friend

We all need a best friend. At a professional level, a good friend is someone who elevates you and holds you accountable to your promises.

It doesn’t always take a human being to do that, though. Google Calendar is absolutely wonderful if you’re forgetful or disorganized, struggle with accountability, or just need some structure in your routine.

I use Google Calendar religiously in my day-to-day life, and I heavily advocate for it. Here are a few ways I use it.

Prerequisites

To use Google Calendar the way I am suggesting, there are a few requirements. First, you need a Google account (such as a Gmail account) to use Calendar. Second, you must turn on Calendar notifications for that account on both your browser/desktop and your mobile device, so that you are alerted promptly when action is required on your part. Finally, and this is the most important element, you need to promise yourself that if you put something on the Calendar, you will stick to it. It is crucial that you check your Calendar each day and treat it as a to-do list, acting on each item at the exact time you allocated.

Accountability

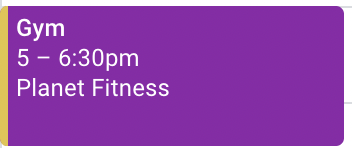

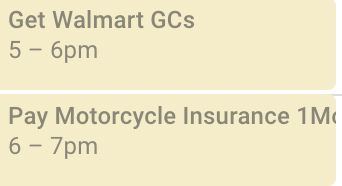

Have to pay bills or follow up on mail? Put it on your Calendar as a weekly or monthly event, and take care of it when it’s scheduled. I even use it to make sure I go to the gym each weekend. No excuses!

Oh, a friend wants to go to dinner with you? Check your Calendar first. If there’s a conflict, either reschedule your prior commitment or reschedule the new one.

Joint Appointments

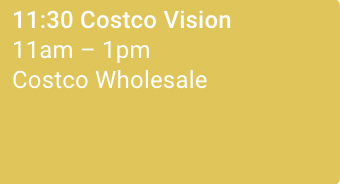

Sometimes my wife and I schedule back-to-back appointments, or even dates. It keeps everything organized when I put the event on the Calendar and add her as a guest, so that we’re both on the same page…or Calendar. I even add the address!

Reminders

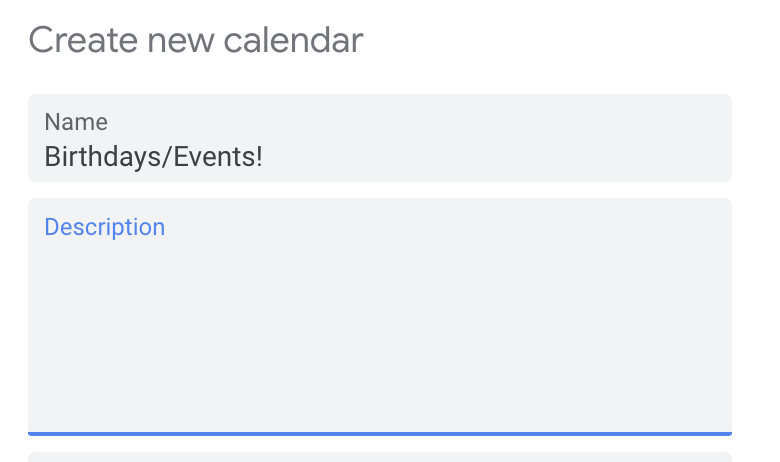

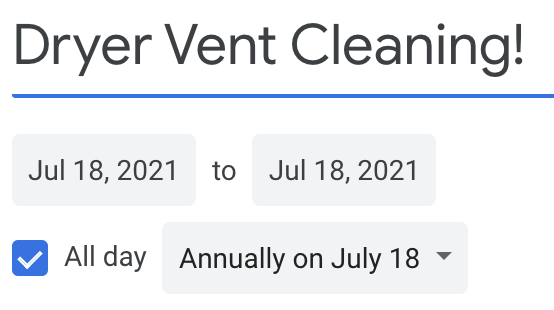

One of my favorite features is Reminders. Who invented birthdays anyway? They’re hard to keep track of, especially if you don’t use social media as much as you should. The cool thing about Calendar is that not only can you create separate calendars that you can toggle on and off, but you can also set recurring reminders for things like birthdays and routine vehicle and home maintenance.

To-Do Lists

Instead of keeping a standalone to-do list (in an app like Google Keep), I like to kill two birds with one stone. Why not put each task directly on your Calendar for when you’d like to tackle it? That way, it’s on a list…and it’s on a schedule: YOUR schedule.

With that said, I hope you’ve made a new friend after reading this article. Give it a shot. Once you start using Calendar, you won’t go back!